John Lennon as Gollum? What AI means for the future of Hollywood

The Beatles in The Lord of the Rings, Wes Anderson directing The Bible… Fake AI movies might be the end of cinema – or a new beginning

In the mid-1960s, the Beatles approached Stanley Kubrick with an idea for a film: a rock and roll musical adaptation of The Lord of the Rings. The bandmates trooped up to Kubrick’s estate near St Albans and made their case to the director: they’d even worked out who would play who, with Paul as Frodo, John as Gollum, George as Gandalf, and Ringo as Sam Gamgee.

Sadly, the project quickly became one of British cinema’s great what-ifs. Kubrick turned them down, believing Tolkien’s book to be unfilmable – which, given he was about to make 2001: A Space Odyssey, was really saying something – while their record label Apple’s approach to Tolkien for the screen rights was also rebuffed.

Yet two weeks ago, 24 frames from this unmade film surfaced online. There was Paul as Frodo, gazing pensively from a castle gate in a fleece tunic – and there was a grinning John emerging from a river, in a mossy bodysuit and crown of pond weeds. George, thickly bearded, was shown in a scarlet wizard’s robe trimmed with gold and another of white sheepskin; elsewhere a circle of minstrels played strange medieval instruments on a clifftop, and a coven of nine Ringwraiths brooded on a moor, black cloaks rippling in the wind.

The scenes really do look like they were captured by a film crew in Britain in the 1960s (though perhaps not one led by Kubrick). But they were in fact the work of Midjourney, a piece of artificially intelligent software which spits out photorealistic images from text prompts fed to it by users.

The Beatles Lord of the Rings shots were created – or arguably solicited – by the Los Angeles-based music video director Keith Schofield, whose other Midjourney requests include scenes from Tim Burton’s abandoned comic book blockbuster Superman Lives, starring Nicolas Cage, and an entirely fictional 1980s David Cronenberg science-fiction horror titled Galaxy of Flesh.

There’s little doubt that AI images are now plausible enough to fool both experts and the general public. Earlier this week, the German artist Boris Eldagsen turned down his Sony World Photography Award after revealing his prizewinning portrait of two women was AI-generated, while last month a fake picture of the Pope in a white puffer jacket (also courtesy of Midjourney) went viral on social media, where it was widely assumed to be a genuine snapshot.

But now that uncannily convincing images can be extracted from these programmes by anyone with an idea and an internet connection, is Hollywood-grade image-making within the reach of ordinary members of the public?

Last week, the first animated short film to have been entirely designed and drawn by an AI tool was released online. Titled Critterz, it’s a spoof wildlife documentary in which an unseen David Attenborough-type squabbles with a series of cute, furry monsters in a forest. The script was written by Chad Nelson, an artist from San Francisco, but both the monsters themselves and their environments were dreamt up by the AI tool DALL-E, which responded to Nelson’s written descriptions of what the characters and backgrounds should look like.

Yes, it then took six human voice actors, six animators (including Nelson) and seven more credited crew members to bring DALL-E’s output to life. And yes, the result is hardly on a par with Pixar, or even Minions. But it’s superficially polished enough to pass as the sort of work that would take even a fairly experienced studio around six months to write, design and render. And Nelson and his skeleton staff whipped it up in a matter of weeks.

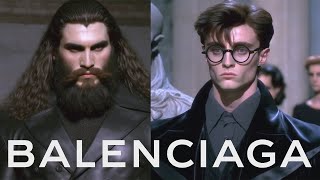

What’s more, much of the work carried out by that staff can technically be done by other AI programmes – albeit slightly amateurishly, for the moment. YouTube is currently awash with viral clips of spoof Balenciaga fashion campaigns featuring the stars of blockbuster franchises such as Marvel and Harry Potter, all of which have been built with minimal human involvement.

A tutorial shows aspiring online humorists how to use the text AI tool ChatGPT to compile lists of popular characters, match them to the fashion house’s outfits from any given year, then write prompts which can be fed into Midjourney to create the corresponding images. Other AI tools can then be used to vocalise and lip-sync dialogue – “You’re Balenciaga, ‘Arry”, delivered by a hunky Hagrid in a leather trench coat, and so on – and hey presto: a video with a potential audience of millions for almost zero creative or technical effort.

In that light, the coming boom in AI filmmaking might superficially resemble the bedroom pop revolution of the 2010s, when young aspiring musicians like Billie Eilish were suddenly able to replicate an expensively honed studio sound at their desks.

But there’s a crucial difference. Where bedroom pop allowed amateurs to polish their own ideas to a professional sheen, AI technically just reshapes other people’s ideas according to the user’s brief. The images produced by the likes of Midjourney are all extrapolated and reconstituted from millions of already-existing photographs and artworks, all of which were created by humans, and many of which will be copyrighted.

An ongoing project to make a series of short animated Pinocchio films in Midjourney hit an early snag when requests for a Pinocchio design only yielded recognisably Disney-like versions of the character: more lateral thinking was required in the text prompts in order to talk the system into coming up with something less nakedly plagiarised. But even Midjourney’s freshest, most striking ‘ideas’ are all ultimately culled from a vast reservoir of original, man-made artwork: it’s just reassembling other people’s ideas in ways you haven’t necessarily seen or thought of.

One currently popular AI cheap thrill is asking the system to dream up new films made in the style of a distinctive contemporary director – for some reason, usually Wes Anderson. And the results are exactly what you’d expect. A Midjourney request this week for an Anderson-style Biblical epic yielded a Ralph Fiennes-like Christ in a tasteful salmon pink robe, an Owen Wilson type as Noah, standing by an ark that resembled an old New York City municipal ferry, and Moses leading the Israelites out of Egypt in a butter yellow linen suit.

But the pictures are so flatly obvious, they’re essentially artistically worthless: since we know they aren’t actually Anderson’s takes on Bible stories, they’re only interesting as a sort of digitally puréed homage. See also the frames of an imaginary alternate version of the 1980s Disney sci-fi classic Tron made by the Chilean surrealist Alejandro Jodorowsky, which were breathlessly circulated online before Christmas.

Even so, Hollywood will be watching advances in the field with interest: the studios are well aware they can’t afford not to keep pace. After the 2004 release of the then-borderline-experimental Sky Captain and the World of Tomorrow – whose original six-minute demo reel the director Kerry Conran made entirely in his garage – look at how quickly the green-screen-draped ‘digital backlot’ became a standard part of the blockbuster toolkit. Might AI prove to be a similar artistic and technical milestone – powerful and even magical in the right hands, but dreary and alienating when misused?

We’ll see for ourselves soon enough. For some scenes in this summer’s fifth and final Indiana Jones film, the now-80-year-old Harrison Ford was digitally de-aged by an AI system that had ingested all of Lucasfilm’s footage of the actor from the 1970s and 1980s.

It’s a very different approach from the painstakingly rendered digital masks used in Martin Scorsese’s The Irishman, and one that heralds AI’s mainstream arrival: the visual effects house Industrial Light & Magic, which worked on Indy 5, is currently using AI on more than 30 forthcoming films and TV series. The question now – excitingly, and perhaps also scarily – is how much of it we’ll actually notice.